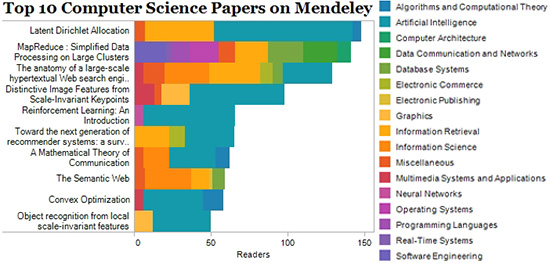

With the Binary Battle under way to promote applications built on the Mendeley API (now including PLoS as well), I decided to take a look at the data to see what people have to work with. The analysis is focused on our second largest discipline, Computer Science. Biological Sciences is the largest, but I started with this one so that I could look at the data with fresh eyes, and also because it’s got some really cool papers to talk about. It was a fascinating list of topics, with many of the expected fundamental papers like Shannon’s Theory of Information and the Google paper, a strong showing from MapReduce and machine learning, but also some interesting hints that augmented reality may be becoming more of an actual reality soon.

1. Latent Dirichlet Allocation (available full-text)

LDA is a means of classifying objects, such as documents, based on their underlying topics. I was surprised to see this paper as number one instead of Shannon’s information theory paper (#7) or the paper describing the concept that became Google (#3). It turns out that interest in this paper is very strong among those who list artificial intelligence as their subdiscipline. In fact, AI researchers contributed the majority of readership to 6 out of the top 10 papers. Presumably, those interested in popular topics such as machine learning list themselves under AI, which explains the strength of this subdiscipline, whereas papers like the MapReduce one or the Google paper appeal to a broad range of subdisciplines, giving those papers smaller numbers spread across more subdisciplines. Professor Blei is also a bit of a superstar, so that didn’t hurt. (The irony of a manually categorized list with an LDA paper at the top has not escaped us.)

2. MapReduce: Simplified Data Processing on Large Clusters (available full-text)

It’s no surprise to see this in the Top 10 either, given the huge appeal of this parallelization technique for breaking down huge computations into easily executable and recombinable chunks. The importance of the monolithic “Big Iron” supercomputer has been on the wane for decades. The interesting thing about this paper is that it had some of the lowest readership scores of the top papers within a subdiscipline, but folks from across the entire spectrum of computer science are reading it. This is perhaps expected for such a general-purpose technique, but given the above it’s strange that there are no AI readers of this paper at all.
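
To make the "executable and recombinable chunks" idea concrete, here is a minimal single-process sketch of a word count written in the map, shuffle, and reduce style. It is only an illustration, not the implementation described in the paper: the function names `map_phase`, `shuffle`, `reduce_phase`, and `word_count` are made up for this example, and a real system would spread the work across many machines rather than a loop.

```python
from collections import defaultdict


def map_phase(document):
    """Emit a (word, 1) pair for every word in one chunk of input."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Group the emitted values by key so each key can be reduced on its own."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Recombine the values for each key; here that just means summing counts."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(documents):
    # Hypothetical driver: each document could be mapped by a different
    # worker, but this sketch simply loops over them in one process.
    pairs = []
    for document in documents:
        pairs.extend(map_phase(document))
    return reduce_phase(shuffle(pairs))


counts = word_count(["big iron is big", "clusters beat big iron"])
print(counts)
```

Because each call to `map_phase` only looks at its own chunk, and each key is reduced independently, both steps could in principle run in parallel without changing the result.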

3. The Anatomy of a large-scale hypertextual search engine (available full-text)

In this paper, Google founders Sergey Brin and Larry Page discuss how Google was created and how it initially worked. This is another paper that has high readership across a broad swath of disciplines, including AI, but wasn’t dominated by any one discipline. I would expect that the largest share of readers have it in their library mostly out of curiosity rather than direct relevance to their research. It’s a fascinating piece of history related to something that has now become part of our everyday lives.

4. Distinctive Image Features from Scale-Invariant Keypoints

This paper was new to me, although I’m sure it’s not new to many of you. It describes how to identify objects in a video stream without regard to how near or far away they are or how they’re oriented with respect to the camera. AI again drove the popularity of this paper in large part, and to understand why, think “Augmented Reality”. AR is the futuristic idea most familiar to the average sci-fi enthusiast as Terminator-vision. Given the strong interest in the topic, AR could be closer than we think, but we’ll probably use it to layer Groupon deals over shops we pass by instead of building unstoppable fighting machines.

5. Reinforcement Learning: An Introduction (available full-text)

This is another machine learning paper and its presence in the top 10 is primarily due to AI, with a small contribution from folks listing neural networks as their discipline, most likely due to the paper being published in IEEE Transactions on Neural Networks. Reinforcement learning is essentially a technique that borrows from biology, where the behavior of an intelligent agent is controlled by the amount of positive stimuli, or reinforcement, it receives in an environment where there are many different interacting positive and negative stimuli. This is how we’ll teach the robots behaviors in a human fashion, before they rise up and destroy us.
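
As a rough sketch of what "behavior controlled by reinforcement" can look like in code, the toy agent below keeps a running value estimate for each action and nudges it toward the reward it receives. The epsilon-greedy exploration rule used here is one common, simple choice picked for illustration, not something taken from the work above, and the action names, reward values, and the `train_agent` function are all invented for this example.

```python
import random


def train_agent(rewards, episodes=500, epsilon=0.1, learning_rate=0.1, seed=0):
    """Learn a value estimate for each action from the reward it produces.

    `rewards` maps each (hypothetical) action name to the fixed stimulus the
    environment returns for it: positive is reinforcement, negative is not.
    """
    rng = random.Random(seed)
    actions = list(rewards)
    values = {action: 0.0 for action in actions}
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(actions)           # explore: try something at random
        else:
            action = max(actions, key=values.get)  # exploit: pick the best-looking action
        reward = rewards[action]
        # Move the estimate a small step toward the reward just observed.
        values[action] += learning_rate * (reward - values[action])
    return values


values = train_agent({"touch_stove": -1.0, "eat_snack": 1.0})
print(max(values, key=values.get))
```

The agent is never told which action is good. It ends up preferring the rewarded one simply because that estimate rises while the punished one falls.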

6. Toward the next generation of recommender systems: a survey of the state-of-the-art and possible extensions (available full-text)

Popular among AI and information retrieval researchers, this paper discusses recommendation algorithms and classifies them into collaborative, content-based, or hybrid. While I wouldn’t call this paper a groundbreaking event of the caliber of the Shannon paper (#7), I can certainly understand why it makes such a strong showing here. If you’re using Mendeley, you’re using both collaborative and content-based discovery methods!
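
To illustrate the difference between the two main categories, here is a small sketch using made-up data. The content-based function ranks papers by how much their keywords overlap with a target, while the collaborative one ranks papers by how similar their owners' libraries are to yours. Everything here (the function names, the Jaccard similarity measure, the sample libraries) is an assumption for the sake of the example and says nothing about how Mendeley or the surveyed systems actually work.

```python
def jaccard(a, b):
    """Overlap between two collections: |intersection| / |union|."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def content_based(target_keywords, catalog):
    """Rank papers by how similar their keywords are to the target keywords."""
    return sorted(
        catalog,
        key=lambda title: jaccard(target_keywords, catalog[title]),
        reverse=True,
    )


def collaborative(user, libraries):
    """Rank unseen papers by the similarity of the users who hold them."""
    mine = set(libraries[user])
    scores = {}
    for other, theirs in libraries.items():
        if other == user:
            continue
        similarity = jaccard(mine, theirs)
        for paper in set(theirs) - mine:
            scores[paper] = scores.get(paper, 0.0) + similarity
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical sample data.
catalog = {"LDA": ["topics", "bayesian"], "SIFT": ["vision", "features"]}
libraries = {
    "ann": {"LDA", "MapReduce"},
    "bob": {"LDA", "MapReduce", "SIFT"},
    "cat": {"Semantic Web"},
}
print(content_based(["topics", "text"], catalog)[0])
print(collaborative("ann", libraries)[0])
```

A hybrid recommender, the third category, would combine the two scores in some way.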

7. A Mathematical Theory of Communication (available full-text)

Now we’re back to more fundamental papers. I would really have expected this to be at least number 3 or 4, but the strong showing by the AI discipline for the machine learning papers in spots 1, 4, and 5 pushed it down. This paper discusses the theory of sending communications down a noisy channel and demonstrates a few key engineering parameters, such as entropy, which measures the average uncertainty, or information content, of a given communication. It’s one of the more fundamental papers of computer science, founding the field of information theory and enabling the development of the very tubes through which you received this web page you’re reading now. It’s also the first place the word “bit”, short for binary digit, is found in the published literature.
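
For the curious, entropy is easy to compute for a short message. The sketch below estimates it from the symbol frequencies in a string using the standard formula H = sum over symbols of p * log2(1/p), measured in bits per symbol. The function name `shannon_entropy` is just a label chosen for this example, and treating each character as an independent symbol is a simplifying assumption.

```python
import math
from collections import Counter


def shannon_entropy(message):
    """Entropy in bits per symbol, estimated from symbol frequencies in `message`."""
    counts = Counter(message)
    total = len(message)
    # Each symbol with probability p = n / total contributes p * log2(1 / p).
    return sum((n / total) * math.log2(total / n) for n in counts.values())


print(shannon_entropy("aaaa"))  # one symbol only, so nothing is uncertain
print(shannon_entropy("abab"))  # two equally likely symbols
print(shannon_entropy("abcd"))  # four equally likely symbols
```

A message that always repeats the same symbol carries no information, two equally likely symbols need one bit each, and four equally likely symbols need two bits each.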

8. The Semantic Web (available full-text)

In The Semantic Web, Sir Tim Berners-Lee, the inventor of the World Wide Web, describes his vision for the web of the future. Now, 10 years later, it’s fascinating to look back through it and see on which points the web has delivered on its promise and how far away we still remain on so many others. This is different from the other papers above in that it’s a descriptive piece, not primary research, but it still deserves its place in the list, and readership will only grow as we get ever closer to his vision.

9. Convex Optimization (available full-text)

This is a very popular book on a widely used optimization technique in signal processing. Convex optimization tries to find the provably optimal solution to an optimization problem, as opposed to a nearby maximum or minimum. While this seems like a highly specialized niche area, it’s of importance to machine learning and AI researchers, so it was able to pull in a nice readership on Mendeley. Professor Boyd has a very popular set of video classes at Stanford on the subject, which probably gave this a little boost as well. The point here is that print publications aren’t the only way of communicating your ideas. Videos of techniques at SciVee or JoVE, or recorded lectures, can really help spread awareness of your research.
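
The "provably optimal versus nearby minimum" distinction can be seen with a toy experiment. The sketch below runs plain gradient descent (chosen here only because it is simple, not because the book centers on it) on one convex and one non-convex function. The two example functions, the step size, and the starting points are arbitrary choices made for illustration.

```python
def gradient_descent(grad, x0, step=0.01, iterations=2000):
    """Repeatedly step downhill along the negative gradient, starting at x0."""
    x = x0
    for _ in range(iterations):
        x -= step * grad(x)
    return x


def convex_grad(x):
    # Derivative of the convex function f(x) = (x - 3)**2.
    return 2 * (x - 3)


def nonconvex_grad(x):
    # Derivative of the non-convex function g(x) = x**4 - 4*x**2 + x,
    # which has two separate valleys.
    return 4 * x**3 - 8 * x + 1


# Convex case: both starting points end up at the same, global minimum.
print(gradient_descent(convex_grad, -10.0), gradient_descent(convex_grad, 10.0))

# Non-convex case: each start settles into whichever valley is nearby.
print(gradient_descent(nonconvex_grad, -2.0), gradient_descent(nonconvex_grad, 2.0))
```

On the convex function the starting point doesn't matter, which is what makes the answer trustworthy. On the non-convex one, where you end up depends on where you began.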

10. Object recognition from local scale-invariant features (available full-text)

This is another paper on the same topic as paper #4, and it’s by the same author. Looking across subdisciplines as we did here, it’s not surprising to see two related papers, both of interest to the main driving discipline, appear in the list. Adding the readers from this paper to the #4 paper would be enough to put it in the #2 spot, just below the LDA paper.
